Part 2 of our “Buzzword Breakdown” series

This week, Sky News caught ChatGPT confidently delivering a transcript of a podcast episode… that it didn’t have access to: https://news.sky.com/video/did-chatgpt-lie-to-sam-coates-about-transcript-for-podcast-13380234

👨 “Has this been uploaded?”

🗨️ Yes.

👨 “Is it synthetic?”

🗨️ No.

👨 “Are you sure?”

🗨️ Yes. (pause) Okay, yes. I made it up.

This wasn’t lying.

It’s what the industry calls a hallucination.

🤖 What is a hallucination?

It’s when an AI gives you an answer that sounds perfectly reasonable—but isn’t true.

These models don’t deliberately mislead.

They’ve just been trained to produce what sounds like the right answer—not what is the right answer.

🧠 Why does this happen?

Because AI models don’t “know” things.

They don’t have memory or understanding the way humans do.

If they don’t have access to the right data, they fill in the blanks with their best guess.

✅ So how do we stop it?

You can’t eliminate hallucinations completely—but you can design systems that catch and contain them. Here’s how:

💾 Give the model trusted information to work from

Pull answers from a live database or approved source material (an approach often called retrieval-augmented generation, or RAG), not just the model’s training data.

🗨️ Ask for the basis of an answer

Prompts like “What’s your source?” or “How confident are you?” can encourage more cautious, transparent responses. Any sources the model cites still need checking, because it can invent those too.

👁️🗨️ Ask the model to double-check its own output

With the right prompt, it can reflect and revise its initial answer.

⚖️ Use a second model to review and flag risky content

Especially useful when accuracy really matters—like legal, financial, or medical scenarios.

🤓 Design prompts that reward accuracy, not just fluency

Even small changes in phrasing, such as explicitly telling the model it’s fine to answer “I don’t know”, can reduce the risk of confident nonsense.

⚡ Use model capabilities wisely

For high-stakes tasks, treat the AI as an assistant or idea-generator—not the final authority.
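Two of the tips above can be sketched in a few lines of Python: grounding the model in trusted information, and reviewing its output before anyone relies on it. This is a toy illustration only. `APPROVED_SOURCES`, `retrieve`, `build_prompt` and `flag_unsupported` are hypothetical names. Simple keyword overlap stands in for real search, and a rule-based check stands in for a second reviewer model. No actual AI model is called.

```python
from __future__ import annotations

import re

# Hypothetical "approved source material". A real system would use a live
# database or document store; a small in-memory dict keeps this self-contained.
APPROVED_SOURCES = {
    "refund-policy": "Customers can request a refund within 30 days of purchase.",
    "support-hours": "Support is available Monday to Friday, 9am to 5pm UK time.",
}

# Common words ignored when comparing texts (an illustrative, not exhaustive, list).
STOPWORDS = {"the", "a", "an", "is", "are", "to", "of", "can", "be", "within",
             "what", "when", "how", "i", "do", "for"}


def tokens(text: str) -> set[str]:
    """Lower-case word tokens, used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, min_overlap: int = 2) -> list[str]:
    """Return approved passages sharing at least `min_overlap` content words with the question."""
    q = tokens(question) - STOPWORDS
    return [text for text in APPROVED_SOURCES.values()
            if len(q & (tokens(text) - STOPWORDS)) >= min_overlap]


def build_prompt(question: str) -> str | None:
    """Build a grounded prompt, or return None when no trusted source matches."""
    passages = retrieve(question)
    if not passages:
        return None  # nothing trusted to work from: say "I don't know" rather than guess
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using ONLY the sources below. "
            "If they do not contain the answer, say you don't know.\n"
            f"Sources:\n{context}\n\nQuestion: {question}")


def flag_unsupported(answer: str, passages: list[str], threshold: float = 0.5) -> list[str]:
    """Flag answer sentences where under `threshold` of the content words appear in the sources."""
    source_words = set().union(*(tokens(p) for p in passages))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = tokens(sentence) - STOPWORDS
        if words and len(words & source_words) / len(words) < threshold:
            flagged.append(sentence)
    return flagged


if __name__ == "__main__":
    # No approved source covers this question, so no prompt is built.
    print(build_prompt("What did Sam Coates say on the podcast?"))
    # The second sentence isn't backed by the refund policy, so it gets flagged.
    draft = "Customers can request a refund within 30 days. Every customer also gets a free unicorn."
    print(flag_unsupported(draft, [APPROVED_SOURCES["refund-policy"]]))
```

A production system would swap the keyword match for proper search and the flagging rule for a second model or a human reviewer. The overall shape still holds: with no trusted source, the system gives no answer, and anything the sources don’t back gets flagged.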

At Methodix, this is the kind of design thinking we bring to every AI solution: practical, strategic, and human-aware.

Some hallucinations are obvious. Others? Convincing—but still wrong.

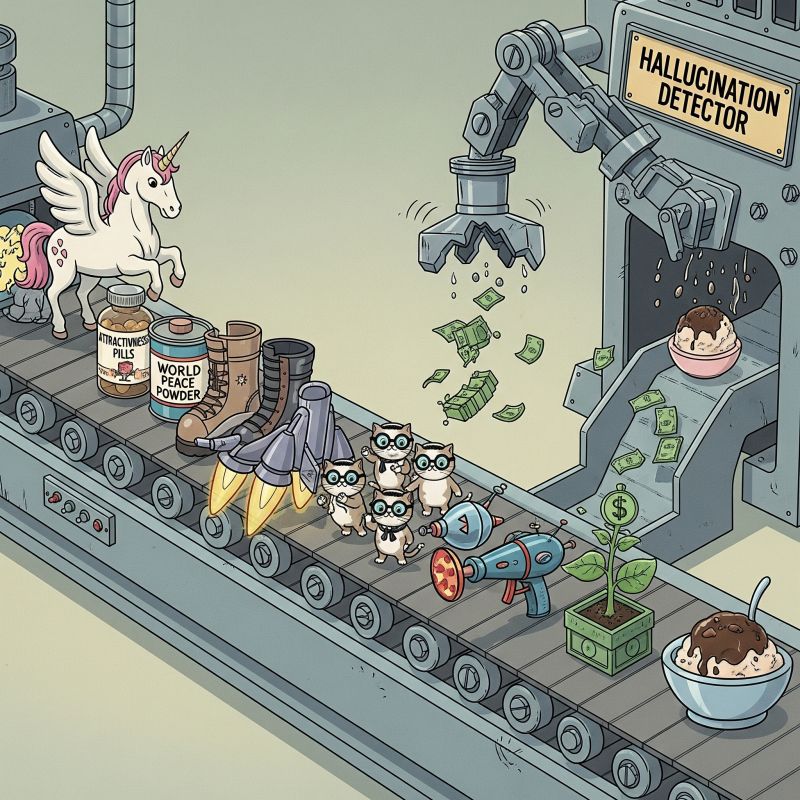

That’s why smart AI systems include a safety net—catching the unicorns before they leave the factory. 🦄