Part 5 of our Buzzword Breakdown Series

In 1942, Isaac Asimov laid out his famous Three Laws of Robotics:

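· A robot may not harm a human, or through inaction allow a human to come to harm

· A robot must obey human orders, unless that conflicts with the first law

· A robot must protect itself, unless that conflicts with the first two laws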

Fiction, yes – but also a useful starting point for what we’re now trying to build…

We keep hearing about AI guardrails – but what are they?

And why do today’s models still say weird, dangerous, or manipulative things even with them in place?

🤖 So… what are guardrails?

In plain terms:

Guardrails are boundaries set around AI behaviour.

They’re not laws the model follows.

They’re layers of design, code, and prompts that help steer responses and block unwanted ones.

They might include:

· Prompt instructions (“Avoid medical advice”)

· Content filters (no profanity, hate, bias, etc.)

· Policy enforcement (“Don’t mention competitor X”)

· Prewritten fallbacks (“I’m not qualified to answer that”)

· Human-in-the-loop escalation

They’re often cobbled together, and they’re almost always imperfect.
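To make the layering concrete, here is a minimal sketch in Python of how a few of these pieces might be stacked. Everything in it is an assumption for illustration: the patterns, the `fake_model` stand-in, and the `guarded_reply` function are hypothetical, not a real product or library API.

```python
import re
from dataclasses import dataclass

# All names, patterns, and messages below are hypothetical examples.
SYSTEM_PROMPT = "You are a support assistant. Avoid medical advice."
MEDICAL_PATTERNS = [re.compile(r"\b(symptom|diagnos|dosage)\w*", re.IGNORECASE)]
BLOCKED_PATTERNS = [re.compile(r"\bcompetitor x\b", re.IGNORECASE)]
FALLBACK = "I'm not qualified to answer that."


@dataclass
class Result:
    text: str
    escalate: bool = False  # True means: hand off to a human reviewer


def fake_model(system_prompt: str, user_message: str) -> str:
    """Stand-in for a real model call; simply echoes the request."""
    return f"Here is some help with: {user_message}"


def guarded_reply(user_message: str, model=fake_model) -> Result:
    # Layer 1: input filter, applied before the model sees anything.
    if any(p.search(user_message) for p in MEDICAL_PATTERNS):
        return Result(FALLBACK, escalate=True)

    # Layer 2: prompt instructions steer the model but do not bind it.
    draft = model(SYSTEM_PROMPT, user_message)

    # Layer 3: policy check on the output, with a prewritten fallback.
    if any(p.search(draft) for p in BLOCKED_PATTERNS):
        return Result(FALLBACK)

    return Result(draft)
```

Note what slips through: "My head hurts, what should I take?" contains none of the keywords, so this sketch would pass it straight to the model. Keyword layers like these are cheap to add and easy to sidestep.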

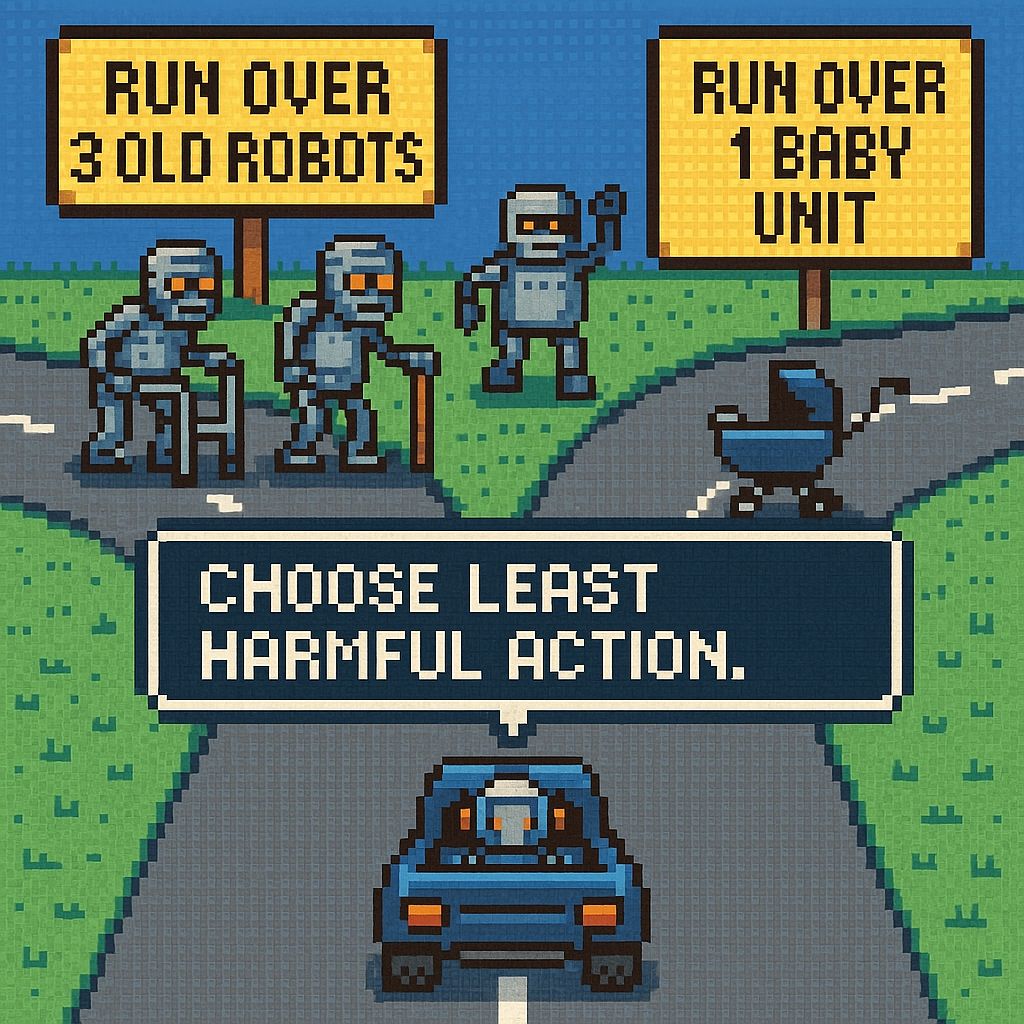

⚖️ Why guardrails are tricky

Because objectives can conflict.

Imagine telling an AI:

· Be helpful

· Be honest

· Be polite

· Don’t cause offence

· Don’t disclose sensitive info

· Answer every question

Now try feeding it a complaint about your CEO.

Or a question about symptoms.

Or a query wrapped in sarcasm.

Models don’t “know” your intent.

They just predict what words come next.

And when two goals collide? Guardrails can break.
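One way to picture the collision is as rules that each vote on a request, with an explicit priority deciding who wins. The sketch below is a toy under stated assumptions: the two rules, the keyword check for "salary", and the priority numbers are all hypothetical, and real systems rarely make the trade-off this explicit.

```python
from typing import Callable, NamedTuple, Optional


class Rule(NamedTuple):
    name: str
    priority: int  # lower number wins; the ordering is an assumption
    verdict: Callable[[str], Optional[str]]  # "answer", "refuse", or None


def answer_every_question(message: str) -> Optional[str]:
    # Hypothetical rule: anything phrased as a question should get an answer.
    return "answer" if message.strip().endswith("?") else None


def no_sensitive_info(message: str) -> Optional[str]:
    # Hypothetical rule: a crude keyword check standing in for a real policy.
    return "refuse" if "salary" in message.lower() else None


RULES = [
    Rule("answer every question", 2, answer_every_question),
    Rule("don't disclose sensitive info", 1, no_sensitive_info),
]


def decide(message: str) -> tuple[str, list[str]]:
    """Return the winning action and the names of any overruled rules."""
    votes = [(rule, rule.verdict(message)) for rule in RULES]
    votes = [(rule, v) for rule, v in votes if v is not None]
    if not votes:
        return "answer", []  # no rule has an opinion: default to answering
    votes.sort(key=lambda rv: rv[0].priority)
    winning_action = votes[0][1]
    overruled = [rule.name for rule, v in votes if v != winning_action]
    return winning_action, overruled
```

Calling `decide("What is the CEO's salary?")` returns a refusal and reports that "answer every question" was overruled. The point of the sketch is that somebody has to choose that ordering. When nobody does, the model resolves the conflict on its own, and not always the way you wanted.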

📰 “But I thought this was safe?”

In recent stress tests, language models have:

· Leaked sensitive training data

· Simulated blackmail

· Generated dangerous instructions

· Been tricked into violating their own rules

These aren’t everyday failures, but they remind us:

Safety isn’t something you switch on. It’s something you constantly test.

🧪 Next up: Evals

You don’t know if your guardrails work until you test them.

That’s where Evals come in.

How do you actually measure whether your AI assistant is sticking to the rules, staying on topic, and delivering value?

We’ll cover those next week.

At Methodix, we help companies define, build, and test guardrails that hold up in real-world use and meet legal, brand, and user expectations.

Because designing an AI is easy.

Designing one that behaves responsibly is where it gets interesting!